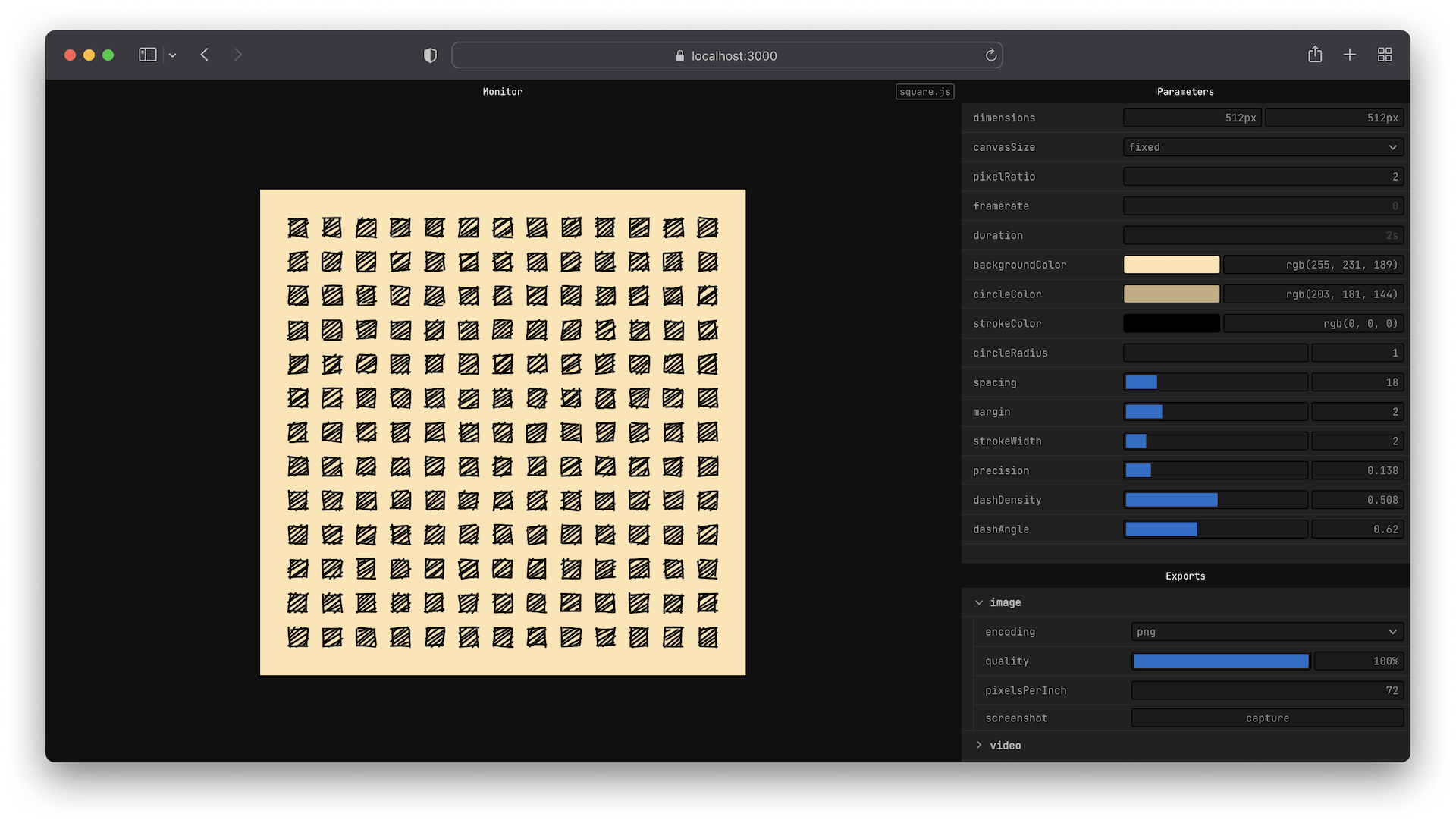

A few years ago, I decided to start working on a development environment that would fulfill the variety of needs I have while working on client and personal projects, from sharing a prototype online to publishing generative art on my social networks.

This project became Fragment, which I made publicly available on my GitHub in September 2022.

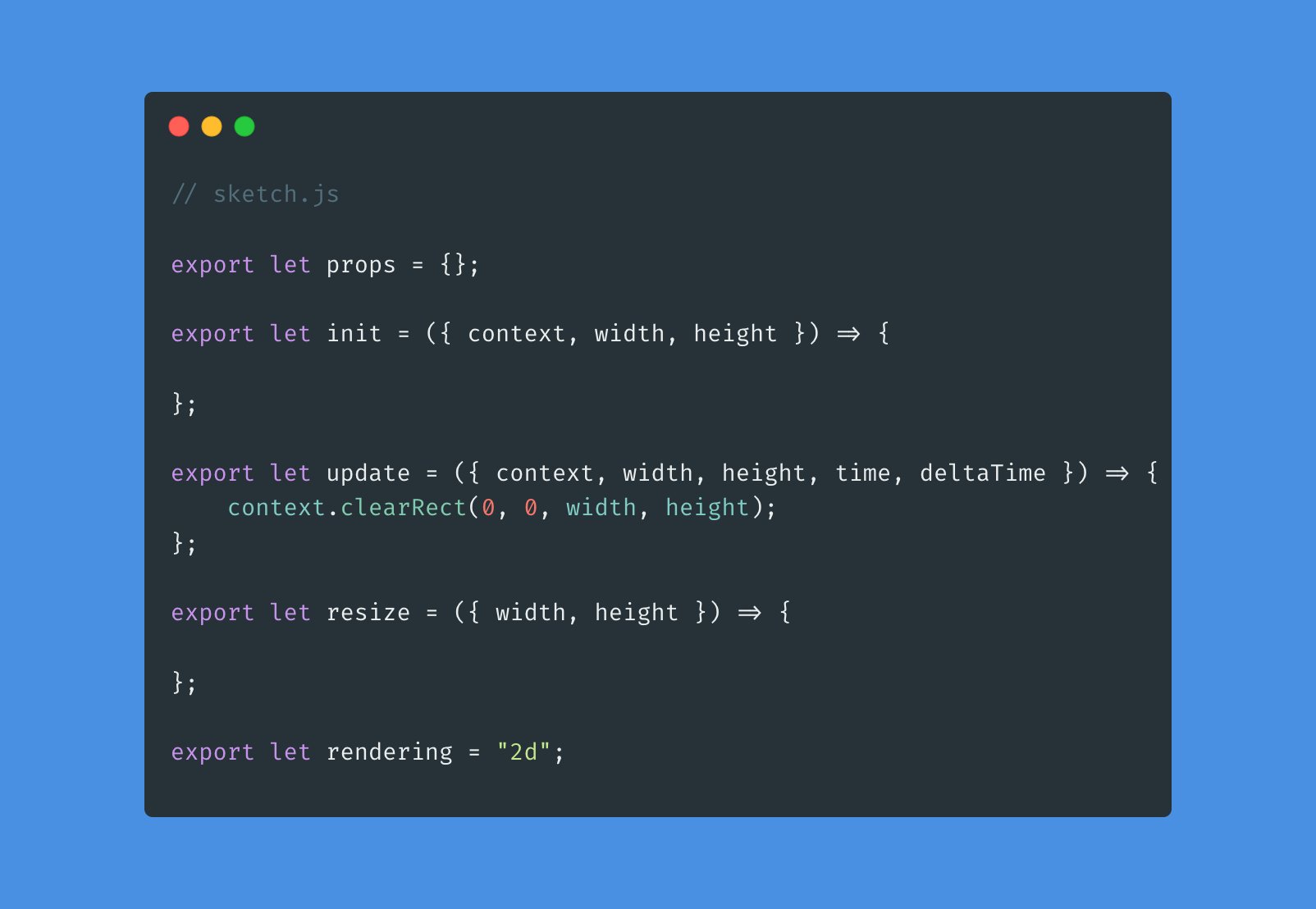

Fragment is built upon the concept of sketches: a sketch is a single file whose ECMAScript module named exports act as hooks in a rendering pipeline.
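
As a rough illustration of what "named exports acting as hooks" can mean, here is a tiny host loop calling hooks on a sketch. Only `init` appears in this article; the `update` hook, the `runSketch` function and the shape of the arguments are assumptions made for the example, not Fragment's actual API. A plain object stands in for the module's exports so the snippet runs on its own.

```javascript
// A stand-in for a sketch module's named exports.
// `update` is a hypothetical hook; only `init` is mentioned in this article.
const sketch = {
  init({ state }) {
    state.frames = 0;
  },
  update({ state }) {
    state.frames += 1;
  },
};

// Hypothetical host: call each hook only if the sketch provides it.
function runSketch(sketchModule, frameCount) {
  const state = {};
  const params = { state };

  if (typeof sketchModule.init === "function") sketchModule.init(params);

  for (let frame = 0; frame < frameCount; frame++) {
    if (typeof sketchModule.update === "function") {
      sketchModule.update({ ...params, frame });
    }
  }
  return state;
}

console.log(runSketch(sketch, 3)); // { frames: 3 }
```

Because every hook is optional, a sketch only has to export what it actually needs.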

Agnosticity

My work relies on <canvas> in various ways, so I needed the tool to work with 2D contexts, different WebGL libraries such as THREE.js or OGL, and fullscreen fragment shaders. To achieve that, Fragment relies on the concept of renderers, which run specific code over a canvas node before going into the lifecycle methods of a sketch.
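
To make the idea of a renderer more concrete, here is a minimal sketch of a registry where each renderer prepares the canvas before the sketch lifecycle starts. The names `renderers`, `mount`, `mountSketch` and the `rendering` property are hypothetical and only serve the example; Fragment's real renderers do more than this.

```javascript
// Hypothetical renderer registry: each renderer prepares a canvas
// and returns the extra parameters handed to the sketch hooks.
const renderers = {
  "2d": {
    mount(canvas) {
      return { context: canvas.getContext("2d") };
    },
  },
  webgl: {
    mount(canvas) {
      return { gl: canvas.getContext("webgl2") };
    },
  },
};

// Run the renderer first, then enter the sketch lifecycle.
function mountSketch(sketch, canvas) {
  const renderer = renderers[sketch.rendering];
  if (!renderer) {
    throw new Error(`Unknown renderer: ${sketch.rendering}`);
  }
  const params = { canvas, ...renderer.mount(canvas) };
  if (typeof sketch.init === "function") sketch.init(params);
  return params;
}
```

In a browser, `mountSketch(sketch, document.querySelector("canvas"))` would hand a 2D sketch its `context` and a WebGL sketch its `gl`, while the sketch code itself stays unaware of how the canvas was prepared.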

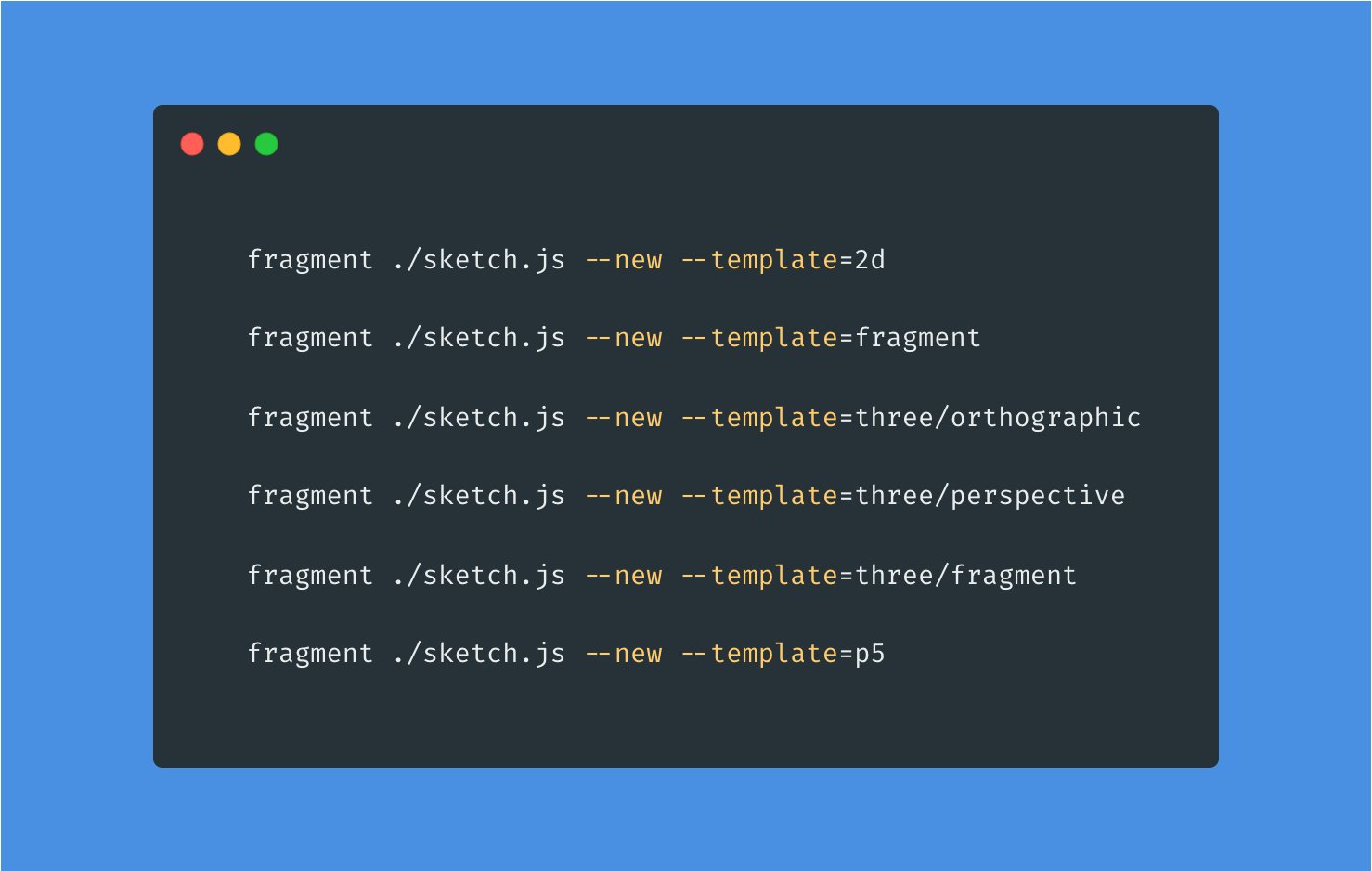

To simplify the usage of the most frequent types of sketches, Fragment comes with built-in templates so I can start working after running a single command.

Fragment also supports custom renderers by using the right hooks and syntax.

Speed

I have spent a fair amount of time in my career speeding up the cold start and compile times of different workflows, as I firmly believe that waiting for your code to compile is just a waste of time. From the time I arrived at Akufen in 2015 until I left in 2019, I kept trying to speed up our front-end workflow: switching our task runner from Grunt to Gulp and then to Node processes, moving away from Browserify to Webpack, testing the first release of ESBuild… When I started freelancing, I rewrote my entire front-end workflow from scratch to use ESBuild, and created a plugin system so I could live-reload styles and hot-reload shaders.

It was obvious from the very start that Fragment needed to feel instant, which was not the case with the first version. After several attempts, and after discovering what a nightmare resolving module imports is, I decided to use Vite as the foundation of Fragment. It was, indeed, very fast. I had to dive deep into it to make the environment work the way I had intended in the first place: instant reload of sketch files, preserved UI… I also ported my hot shader reloading plugin to the Vite plugin system, using a dedicated WebSocket.
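
For readers curious about what hot shader reloading can look like as a Vite plugin, here is a minimal sketch. Note that it does not reproduce Fragment's plugin: instead of a dedicated WebSocket, it swaps in Vite's own HMR channel through the `handleHotUpdate` hook and `server.ws.send`, simply to stay short. The plugin name, the `shader-update` event name and the list of file extensions are assumptions.

```javascript
// Hypothetical plugin: push the new shader source to the client
// instead of letting Vite reload the page.
function hotShaderReload({ extensions = [".glsl", ".frag", ".vert"] } = {}) {
  return {
    name: "hot-shader-reload",
    async handleHotUpdate({ file, read, server }) {
      // Ignore anything that is not a shader file.
      if (!extensions.some((ext) => file.endsWith(ext))) return;

      // Send the updated source over Vite's HMR WebSocket as a custom event.
      server.ws.send({
        type: "custom",
        event: "shader-update",
        data: { file, source: await read() },
      });

      // An empty array tells Vite the update was fully handled,
      // so no module is invalidated and no full reload happens.
      return [];
    },
  };
}
```

On the client, a listener registered with `import.meta.hot.on("shader-update", callback)` would receive `{ file, source }` and could recompile the matching program while the scene keeps running.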

Finally, I had a dev environment that started almost instantaneously and reloaded shaders in a few milliseconds while preserving scenes, all without a page refresh. So long Cmd+R, you will not be missed.

Exports

Now that I had a fast, agnostic development environment that sped up my working process, I needed a way to improve how I could share this work with others, from posting my personal generative art on social media to showing progress to my clients.

Thankfully, canvas-sketch had already paved the way with a great workflow on the subject, providing keyboard shortcuts that either take a screenshot of the canvas or export the frames of a sketch to be assembled by another tool like ffmpeg.

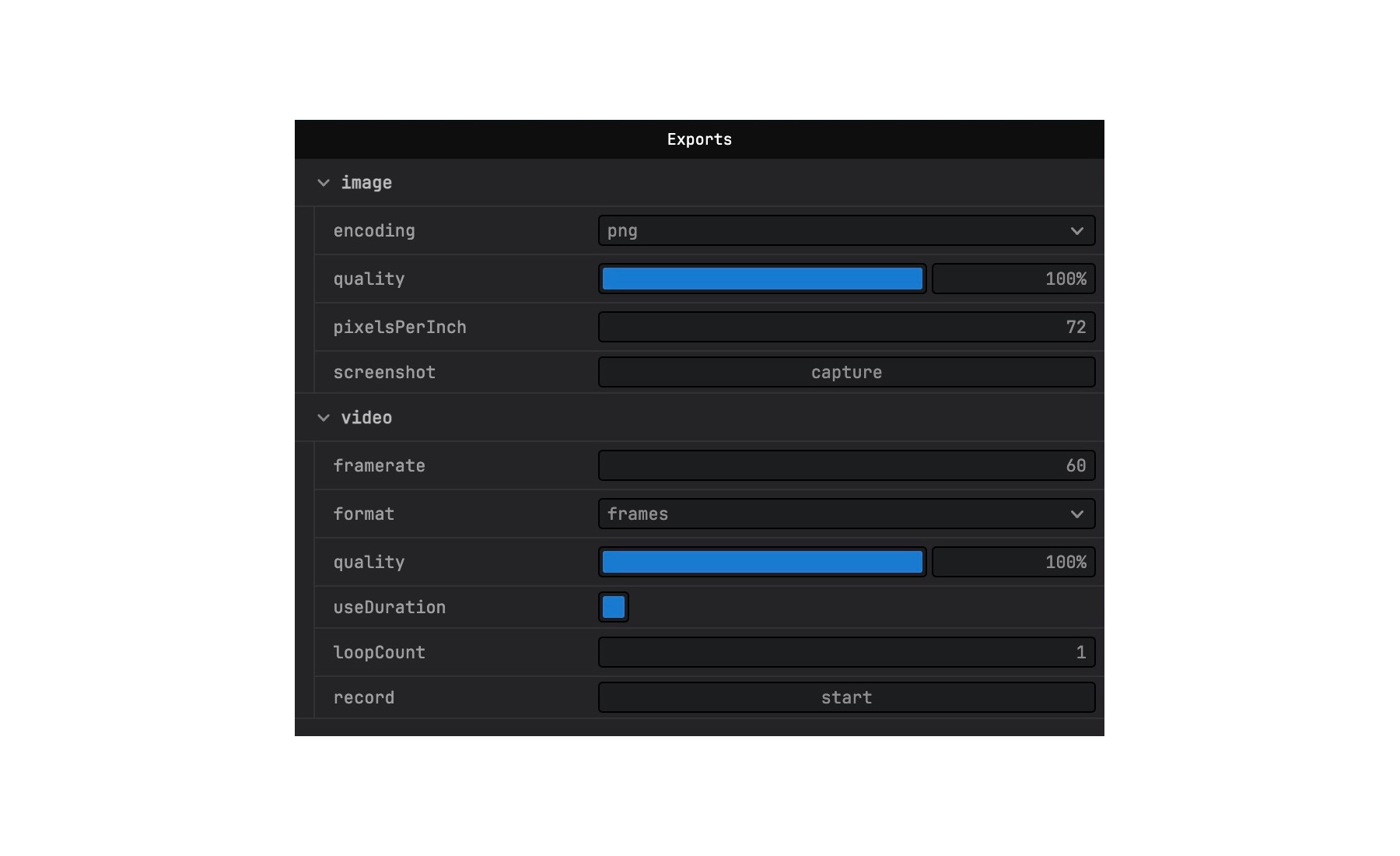

Screenshots

I implemented a similar behaviour for screenshots by overriding the Cmd+S keyboard shortcut. Screenshots can be exported to PNG, JPEG and WebP formats. Under the hood, Fragment calls canvas.toDataURL() and creates a Blob from it. The Blob is sent to the server through a POST request rather than through the WebSocket, because a POST request gives the client a response. On the server side, the request body is parsed to create a file on disk. Thanks to the HTTP response, the client can fall back to a native browser download if the save has failed for some reason.
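
The client side of that flow can be sketched as follows. This is a simplified illustration, not Fragment's code: the `/save` endpoint, the function names and the option names are all assumptions, and the server part is left out.

```javascript
// Convert a base64 data URL (as returned by canvas.toDataURL()) into a Blob.
function dataURLToBlob(dataURL) {
  const [header, base64] = dataURL.split(",");
  const mime = header.slice("data:".length).split(";")[0];
  const binary = atob(base64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return new Blob([bytes], { type: mime });
}

// Browser-only fallback: trigger a native download through a temporary link.
function downloadBlob(blob, filename = "screenshot.png") {
  const url = URL.createObjectURL(blob);
  const link = document.createElement("a");
  link.href = url;
  link.download = filename;
  link.click();
  URL.revokeObjectURL(url);
}

// Hypothetical save routine: POST the Blob to the dev server and
// fall back to a browser download if the request fails.
async function saveScreenshot(
  canvas,
  { endpoint = "/save", type = "image/png", request = fetch, download = downloadBlob } = {}
) {
  const blob = dataURLToBlob(canvas.toDataURL(type));
  try {
    const response = await request(endpoint, { method: "POST", body: blob });
    if (!response.ok) throw new Error(`Save failed: ${response.status}`);
    return "saved";
  } catch (error) {
    download(blob);
    return "downloaded";
  }
}
```

Passing `request` and `download` as options keeps the routine easy to exercise outside a browser, which is the only reason they are parameters here.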

Videos

Thanks to new tools and APIs available in browsers, I was able to create WebM, MP4 and GIF video files directly in the browser by using different open-source encoders publicly available on GitHub:

- gifenc for GIF encoding

- WebmWriter for WebM encoding

- mp4-wasm for MP4 encoding

It’s also possible to export an animation frame by frame like canvas-sketch does.
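
A frame-by-frame export usually relies on a fixed timestep rather than on wall-clock time, so the result is the same however long each frame takes to draw. Here is a minimal sketch of that idea; `exportFrames`, `frameName` and the `render`/`capture` callbacks are hypothetical names, not Fragment's or canvas-sketch's API.

```javascript
// Render every frame at a fixed timestep instead of wall-clock time,
// so the export is identical no matter how slow each frame is to draw.
async function exportFrames({ duration, fps, render, capture }) {
  const totalFrames = Math.round(duration * fps);
  const frames = [];

  for (let frame = 0; frame < totalFrames; frame++) {
    const time = frame / fps; // seconds since the start of the animation
    render({ time, frame });
    frames.push(await capture(frame));
  }
  return frames;
}

// Zero-padded names keep the files ordered for tools such as ffmpeg.
function frameName(frame, digits = 4) {
  return `frame-${String(frame).padStart(digits, "0")}.png`;
}
```

In a browser, `capture` could turn the canvas into a Blob and send it to the server under the name returned by `frameName(frame)`.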

Static build

Last but not least, sketches can be exported to static files thanks to Vite by using a --build flag. This enables live deployment of a sketch, which is quite useful when you need to share a work in progress or hand over a sketch for someone to play with. I have also deployed sketches just by pushing to a Git repository, thanks to Netlify.

Interface

I wanted the UI to be fully resizable, as I work on different canvas sizes and different monitors. And while building new features into Fragment, such as a MIDI panel or the console, it quickly became obvious that these things shouldn’t always be there, but rather be brought on screen when needed and hidden away in an instant.

It took me some time, but in the end I was able to develop this flexible interface with a recursive grid where columns can be divided into multiple rows, and rows can be divided into multiple columns. Each column and row can then hold a Module, such as the monitor, the parameters or the console. This makes for a fluid interface that can be changed in a few clicks.

The layout data is serialized on every change to be saved into Local Storage and retrieved between sessions.
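
One possible way to represent such a recursive grid is a tree of rows and columns, which serializes naturally to JSON. The shape below (`type`, `children`, `module`), the storage key and the helper names are assumptions for the sake of the example, not Fragment's actual layout format.

```javascript
// Hypothetical layout tree: columns contain rows, rows contain columns,
// and leaves hold the name of the module they display.
const layout = {
  type: "column",
  children: [
    { type: "row", module: "monitor" },
    {
      type: "row",
      children: [
        { type: "column", module: "parameters" },
        { type: "column", module: "console" },
      ],
    },
  ],
};

// Walk the tree recursively and collect the modules it displays.
function listModules(node) {
  if (node.module) return [node.module];
  return (node.children ?? []).flatMap((child) => listModules(child));
}

const STORAGE_KEY = "layout"; // assumed key name

// `storage` is anything with the Web Storage interface, e.g. localStorage.
function saveLayout(storage, tree) {
  storage.setItem(STORAGE_KEY, JSON.stringify(tree));
}

function loadLayout(storage, fallback) {
  const raw = storage.getItem(STORAGE_KEY);
  return raw === null ? fallback : JSON.parse(raw);
}
```

Calling `saveLayout(localStorage, layout)` after each change and `loadLayout(localStorage, defaultLayout)` on startup is enough to restore the interface between sessions.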

Interactivity

I love interactions. I think there’s something truly unique about inviting end users to “join” a creative project. That’s why most of my work is interactive, at least in some way.

Even if a project is not meant to be interactive, it can also be helpful to interact with a sketch while making it. It can bring a debug mode on screen, speed up the creative process by avoiding too much back and forth between the code and the screen, or whatever you can think of…

I like to use a MIDI keyboard to trigger functions or test different values of sketch properties. However, using MIDI in JavaScript can be a bit tedious. The Web MIDI API is powerful but also complex and just reacting to MIDI inputs requires a lot of code. I wanted to have built-in support for MIDI inputs in Fragment, with the ability to configure them on the fly.
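
To give an idea of the code involved, here is a minimal sketch of reacting to note and control change messages with the Web MIDI API. The function names and the shape of the returned objects are my own for this example; only `navigator.requestMIDIAccess()`, `access.inputs` and `onmidimessage` come from the API itself.

```javascript
// Decode the raw bytes of a MIDI message into a small event object.
function parseMIDIMessage([status, data1, data2]) {
  const command = status & 0xf0; // upper nibble: message type
  const channel = (status & 0x0f) + 1; // lower nibble: channel 1-16

  if (command === 0x90 && data2 > 0) {
    return { type: "noteon", channel, note: data1, velocity: data2 };
  }
  // A note-on with velocity 0 is conventionally treated as a note-off.
  if (command === 0x80 || command === 0x90) {
    return { type: "noteoff", channel, note: data1, velocity: data2 };
  }
  if (command === 0xb0) {
    return { type: "controlchange", channel, controller: data1, value: data2 };
  }
  return { type: "unknown", channel };
}

// Browser-only: request access and forward decoded messages from every input.
async function listenToMIDI(onMessage) {
  const access = await navigator.requestMIDIAccess();
  for (const input of access.inputs.values()) {
    input.onmidimessage = (event) => onMessage(parseMIDIMessage(event.data));
  }
  return access;
}
```

This still ignores devices being plugged in or removed, permission errors and every other message type, which is where most of the remaining code goes.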

While developing the system for MIDI, I thought I could abstract inputs as triggers to also include mouse and keyboard events. Triggers can be attached on the fly to props from the Fragment interface. This is useful in the process of creation, to quickly map a range input to a MIDI control for example. They are saved in local storage to preserve the configuration between refreshes and work sessions.
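
Mapping a range input to a MIDI control mostly comes down to rescaling a 7-bit value onto the range of the prop. Here is a small sketch of that step; the `mapControlToProp` helper and the shape of the `radius` prop are hypothetical.

```javascript
// Map a 7-bit MIDI control value (0-127) onto a prop's range.
function mapControlToProp(value, { min = 0, max = 1, step } = {}) {
  const mapped = min + (value / 127) * (max - min);
  if (!step) return mapped;
  // Snap to the nearest step, then clamp to stay inside the range.
  const snapped = min + Math.round((mapped - min) / step) * step;
  return Math.min(max, Math.max(min, snapped));
}

// Hypothetical prop, shaped like a range input.
const radius = { value: 10, params: { min: 0, max: 200, step: 1 } };

radius.value = mapControlToProp(127, radius.params);
console.log(radius.value); // 200
```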

But triggers can also be used declaratively in the code to be able to ship interactive sketches.

```javascript
import {
  // Mouse triggers
  onMouseDown,
  onMouseUp,
  onMouseMove,
  // Keyboard triggers
  onKeyDown,
  onKeyUp,
  onKeyPress,
  // MIDI triggers
  onNoteOn,
  onNoteOff,
  onControlChange,
} from '@fragment/triggers';

function changeColors() {
  //...
}

export let init = () => {
  onKeyDown('C', changeColors);
}
```

Triggers are automatically destroyed and re-instantiated between sketch reloads, so you don’t need to clean them up manually.
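
One way to get this kind of automatic cleanup is to track every registration in a registry that the host empties before re-running a sketch. The sketch below illustrates the pattern only; `createTriggerRegistry`, `disposeAll` and `size` are hypothetical names and this is not Fragment's implementation.

```javascript
// Hypothetical trigger registry: every registration is tracked so the host
// can remove all listeners before a sketch is re-initialized.
function createTriggerRegistry(target) {
  const disposers = new Set();

  function onKeyDown(key, callback) {
    const listener = (event) => {
      if (event.key.toLowerCase() === key.toLowerCase()) callback(event);
    };
    target.addEventListener("keydown", listener);

    const dispose = () => {
      target.removeEventListener("keydown", listener);
      disposers.delete(dispose);
    };
    disposers.add(dispose);
    return dispose;
  }

  // Called by the host on every sketch reload.
  function disposeAll() {
    for (const dispose of [...disposers]) dispose();
  }

  return { onKeyDown, disposeAll, size: () => disposers.size };
}
```

In a browser, the host would create the registry with `window` as the target, expose `onKeyDown` to the sketch, and call `disposeAll()` right before calling `init` again.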

Roadmap

There are a lot of features I want to add to Fragment, but for now I’m prioritizing making what is already there work without bugs! In a nutshell, here is what I would like to add:

- Website/docs

- Improve support for P5.js

- Audio integration with beat detection

- Webcam integration

- Tensorflow integration

- Open sketch monitor in another window

I’ve read a lot about founders of open-source projects burning out quite quickly, so I’ll take the time needed to do those.

As I’ve been using it on every client and personal project for two years now, I’m excited for the future of this project.